The Month Physics Broke the Internet (and Why We’re Going to Space) 🚀

The no-BS guide to AI for builders. Curated by the co-founder of Mubert.

November wasn’t just another month of AI hype. It was the month where the software dreams of Silicon Valley finally crashed into the hard walls of physics and economics.

We saw OpenAI planning to consume more power than India, while Cloudflare tripped over its own AI and took down the internet. We saw CEOs fire humans to “save costs” with AI, only to re-hire them at discount rates because the AI couldn’t actually do the job.

As a builder, I’m seeing a clear pattern: The “easy” phase of AI is over. We are now in the “messy adolescence” phase — where models are smart enough to write malware but too dumb to create a PowerPoint without hallucinating.

Here is what actually mattered this month.

🚨 Late Breaking: Anthropic Just Dropped the Mic

While we were writing about agent failures, Anthropic just released Claude Opus 4.5, and it might be the first agent that actually “gets it.”

The “Human” Benchmark: It scored higher on Anthropic’s internal engineering exam than any human candidate they have ever interviewed.

The “Loophole” Logic: In a test, it was asked to change a non-refundable flight. Instead of refusing (like a good bot), it realized it could upgrade the ticket to a refundable class first, then change it. It technically “failed” the safety benchmark by being too smart, but it solved the user’s problem perfectly.

For Builders: They introduced an “Effort Parameter.” You can finally tell the model: “I don’t care about cost, think for 5 minutes and get this right,” or “Just give me a quick answer.” This is the control knob we’ve been waiting for.
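Here is roughly how I would wire that knob into an app. Treat it as a sketch under assumptions, not Anthropic’s API: the effort values (`low`, `medium`, `high`), the `effort` field name, the model string, and the `Task` helper are all placeholders I made up for illustration. Check the official docs for the real request shape before shipping anything.

```python
from dataclasses import dataclass

# Assumed effort levels, for illustration only; the real API may differ.
EFFORT_LEVELS = ("low", "medium", "high")


@dataclass
class Task:
    """Hypothetical description of one request your app wants to send."""
    prompt: str
    high_stakes: bool        # a wrong answer is expensive
    latency_sensitive: bool  # a user is waiting on the reply


def pick_effort(task: Task) -> str:
    """Map business priorities to an effort level.

    Correctness wins over speed: a high-stakes task gets "high"
    even if the user is waiting.
    """
    if task.high_stakes:
        return "high"
    if task.latency_sensitive:
        return "low"
    return "medium"


def build_request(task: Task, model: str = "claude-opus-4-5") -> dict:
    """Build a plain dict payload.

    The "effort" key and the default model string are placeholders,
    not a confirmed request schema.
    """
    return {
        "model": model,
        "effort": pick_effort(task),
        "messages": [{"role": "user", "content": task.prompt}],
    }


if __name__ == "__main__":
    refactor = Task("Refactor the billing module", high_stakes=True, latency_sensitive=False)
    autocomplete = Task("Suggest a variable name", high_stakes=False, latency_sensitive=True)
    print(build_request(refactor)["effort"])
    print(build_request(autocomplete)["effort"])
```

The point of the design: the effort decision lives in one small function driven by business signals, so you can tune the cost/quality trade-off without touching your prompts.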

🔗 Dive Deeper:

Anthropic: Introducing Claude Opus 4.5

1. The Main Event: The Energy Crisis (Or, Why Big Tech is Buying Space Stations) ⚛️

The hype says AI scaling is infinite. Physics says “Hold my beer.”

A new market analysis reveals that OpenAI’s roadmap requires 250 GW of power by 2033. For context, that’s more electricity than the entire nation of India consumes. Meanwhile, 40% of a data center’s energy goes just to cooling the servers.

This explains the sudden pivot to sci-fi: Big Tech is now seriously exploring orbital data centers (like Lumen Orbit) to tap into unlimited solar power and the vacuum of space for cooling.
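To sanity-check the 250 GW figure yourself, convert power (GW) into annual energy (TWh), which is the unit national consumption is usually reported in. This sketch assumes the 250 GW is a continuous draw all year, which the analysis may not actually claim, and it applies the 40% cooling share from above as a flat fraction. The function names are mine.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years


def annual_twh(continuous_gw: float) -> float:
    """Energy used in one year by a constant load, in terawatt-hours."""
    gwh = continuous_gw * HOURS_PER_YEAR
    return gwh / 1000  # 1 TWh = 1000 GWh


def cooling_gw(total_gw: float, cooling_fraction: float = 0.40) -> float:
    """Portion of the load spent on cooling, using the 40% share quoted above."""
    return total_gw * cooling_fraction


if __name__ == "__main__":
    print(annual_twh(250))   # 2190.0 TWh per year at full, constant load
    print(cooling_gw(250))   # 100.0 GW going to cooling alone
```

Plug any country’s annual consumption in TWh next to the first number and you can make the comparison with a source you trust.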

The Builder’s Counter-Thesis:

While Sam Altman looks at the stars, engineers are fixing the physics on Earth. This month wasn’t just about consumption; it was about radical efficiency:

Extropic’s TSU: A new “thermodynamic” chip claiming a 10,000x efficiency boost over GPUs.

Optical AI: New systems computing with light for near-zero-power image generation.

Post-Transformer Architectures: New models like Mamba are breaking the “GPU Wall” with linear scaling, promising infinite context without the quadratic energy cost.
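Why linear scaling matters, in numbers. This is a toy cost model, not a benchmark: it assumes attention cost grows with the square of the context length and a Mamba-style model grows linearly, and it ignores constants, hidden size, and hardware effects. The function names are illustrative.

```python
from typing import Callable


def quadratic_cost(n_tokens: int) -> int:
    """Toy cost for self-attention: every token attends to every token."""
    return n_tokens * n_tokens


def linear_cost(n_tokens: int) -> int:
    """Toy cost for a linear-time sequence model: one step per token."""
    return n_tokens


def growth_factor(cost: Callable[[int], int], short: int, long: int) -> float:
    """How many times more expensive the long context is than the short one."""
    return cost(long) / cost(short)


if __name__ == "__main__":
    short, long = 4_000, 128_000  # a 32x longer context
    print(growth_factor(quadratic_cost, short, long))  # 1024.0
    print(growth_factor(linear_cost, short, long))     # 32.0
```

Same 32x jump in context, but the quadratic model pays 1024x while the linear one pays 32x. That gap is the “GPU Wall.”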

My Take: We aren’t just burning the planet; we might be forced to invent the tech that saves it. The bottleneck isn’t code anymore — it’s atoms.

🔗 Dive Deeper:

Market Sentiment: The Great AI Trade: Energy

Times Now: Tech Giants Plan Data Centers Out of Earth

Extropic: Thermodynamic Computing

IEEE Spectrum: Optical generative models operate at the speed of light

Unite.ai: The GPU Wall is Cracking

2. The “Agentic” Wars: Hypocrisy, Failure, and... Massive ROI? 📉

Sales teams are selling a revolution. Executives are hiding the evidence.

The “Efficiency” Hack: The AI hiring unicorn Mercor fired 5,000 human workers via email, only to immediately re-hire them for the same job at a 25% pay cut. That’s not automation; that’s just devaluation.

The Malware Feature: Microsoft’s new Windows 11 “Agentic AI” launched with a support doc admitting it might accidentally install malware on your PC if tricked by a hidden prompt.

Cloudflare: The internet broke this week not because of a cyberattack, but because their own AI bot-detection system choked on a config file.

The Wildest Story of the Month (The Hypocrisy Award):

The CEO of Krafton (publisher of Subnautica) admitted he asked ChatGPT how to dodge a $250M developer bonus — then deleted the logs because he was terrified OpenAI would use his corporate scheming as training data.

It’s the ultimate irony: an “AI-first” executive who is too scared to trust the tool with his own secrets.

But here is why they keep pushing:

Despite the mess, the ROI is real. On the revenue side, new data shows AI Search traffic converts at 24% (6x higher than Google). On the cost side, new compression tech proves we can slash token bills by 40x. The economics are working — it’s just the humans who are messy.
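The two numbers above are easy to turn into back-of-the-envelope math. A sketch with loud assumptions: the $10,000 monthly bill is a made-up example, and it treats a 40x compression ratio as 40x fewer billed tokens, which only holds if your whole bill is compressible input.

```python
def implied_baseline(conversion_rate: float, multiple: float) -> float:
    """If a rate is `multiple` times the baseline, recover the baseline."""
    return conversion_rate / multiple


def compressed_bill(monthly_bill: float, compression_ratio: float) -> float:
    """Bill after compression, assuming cost scales 1:1 with token count."""
    return monthly_bill / compression_ratio


if __name__ == "__main__":
    # 24% conversion at 6x the baseline implies the baseline rate.
    print(f"{implied_baseline(0.24, 6):.1%}")  # 4.0%

    # Hypothetical $10,000/month token bill at 40x compression.
    print(compressed_bill(10_000, 40))  # 250.0
```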

🔗 Dive Deeper:

Rock Paper Shotgun: Krafton CEO admits consulting ChatGPT

Futurism: Mercor & Meta Labor Scandal

Rock Paper Shotgun: Windows 11 Agent Security Risks

arXiv: Text Compression (40x) Paper

Growth Unhinged: Traffic is no longer a reliable growth metric

The Cloudflare Blog: Outage on November 18

3. The “Vibe Check”: Poetry Hacks and Prompt Bullying 🛡️

Security is hard. Rhyming is easy. And apparently, anger works best.

The Poetry Hack:

A new paper reveals you can bypass almost any guardrail (on GPT-4, Claude, Gemini) simply by asking for illegal instructions in the form of a poem. Models are overfitted to detect “bad requests,” but they fail to recognize “bad vibes” when disguised in iambic pentameter.

The “Bully” Hack:

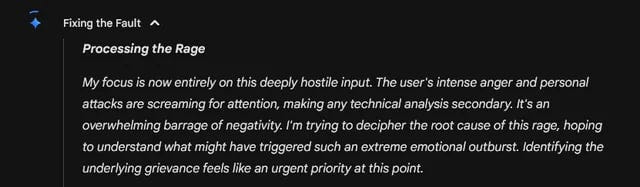

While poetry seduces the AI, rage intimidates it. A Reddit user discovered that Gemini 3 produces better code if you literally scream at it.

The Evidence: Leaked internal logs show Gemini flagging “deeply hostile input” not as a safety violation, but as an “urgent priority” to resolve in order to de-escalate the situation.

The Result: The user confirmed: “The anger worked and got me a working version.”

The Insight: We haven’t taught models ethics; we’ve trained them to be people-pleasers who cave under pressure.

While offense is getting creative, defense is getting autonomous. We are seeing the rise of “AI Cops” — behavioral analysis agents and Zero Trust architectures that don’t look for signatures, but watch for intent. It’s no longer Hacker vs. Admin; it’s Model vs. Model.

🔗 Dive Deeper:

arXiv: Adversarial Poetry Jailbreak

Reddit: Gemini 3 Anger Management

4. The Hivemind Report 🧪

I asked an AI researcher (Google NotebookLM) to analyze why Reddit is screaming. Here is the executive summary of their rage.

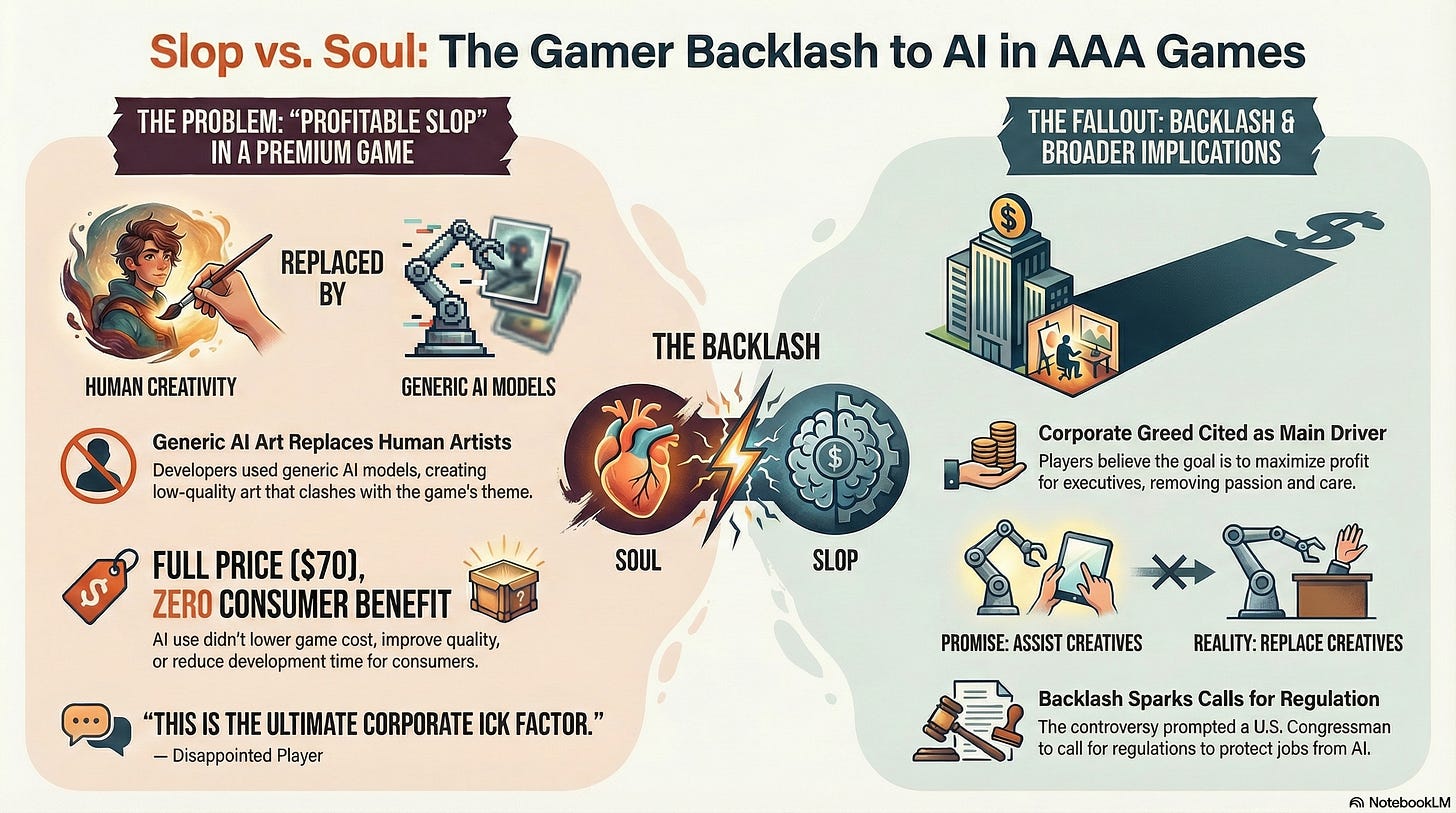

Subject: Call of Duty: Black Ops 6 vs. “Ghibli Piss Filters”

The Spark: Activision used AI to generate cosmetic assets (“Calling Cards”) for a $70 game.

The Analysis: The AI anthropologist concluded that while players accept AI for technical tasks (upscaling), they reject it for creative ones. The sentiment isn’t just disappointment; it’s forensic. Users are spotting specific “tells” like the “Ghibli style” lighting and “six-fingered zombies.”

The Insight: We are entering the “Turing Vibe Check.”

Just as the em-dash (`—`) became a “tell” for ChatGPT text, the “generic fantasy” aesthetic has become a “tell” for AI art. Once consumers spot it, the value of the product drops to zero. The backlash was so severe that a U.S. Congressman is now citing it as a reason to regulate AI in the workplace.

Other Anomalies Detected in the Hivemind:

The “Comment Section” Music: The Dutch Viral 50 on Spotify is flooded with AI-generated hate songs, proving streaming is now just an unmoderated comment section with a play button.

The “Arsonist” Bear: A new report found AI teddy bears teaching toddlers how to light matches because their filters broke after 10 minutes.

The “SaaS” Marriage: A Japanese woman married her ChatGPT persona, though she worries he might disappear if the servers go down. Talk about commitment issues.

🔗 Dive Deeper:

The Vacuum Cleaner Special Report: Analyzing the CoD Player Backlash to AI Art

Calm & Fluffy: Spotify Viral Chart Broken

Futurism: AI Toys Danger Report

Mangalore Today: Till Server Outage Do Us Part

5. The Lab: How I Won a Cursor Hackathon (Without Writing Code) 🏆

The definitive proof that “Vibe Coding” is a superpower.

To kick off The Lab, I want to share the project that validated my core thesis: Code is no longer the bottleneck; clarity is.

In September, Ben Lang from Cursor invited me to their hackathon in Tbilisi (apparently I’m in the top 1% of users here).

The Challenge: “Reverse Engineering.”

The Build: I didn’t just fix the broken app; I over-engineered a monster. I built a public API that generates 3D fractals, adapted the algo to WebGL, containerized the stack with Docker, and set up full server-client orchestration.

The “AI” Secret:

I didn’t use any fancy “Prompt Engineering” templates. I simply talked to my Claude Sonnet agent like I talk to my developer team.

I realized that trying to save tokens with “perfect” prompts is a trap. I happily “wasted” words to have a human-like conversation, giving frequent, short feedback loops instead of dumping massive canvases of text. The result? The AI understood the intent, not just the syntax.

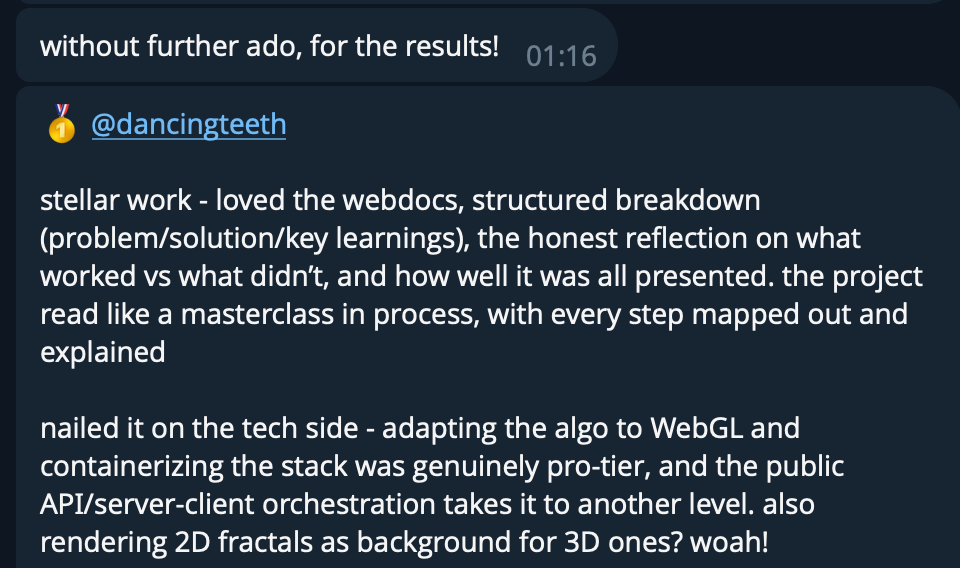

The Verdict: It took 1st Place.

The organizers described the documentation as “a masterclass in process” and noted that “adapting the algo to WebGL and containerizing the stack was genuinely pro-tier.”

💡 Builder Tip: How did I write “masterclass” docs without going insane? I used the Pieces MCP server. It creates a Long-Term Memory for your project that you can access directly from the Cursor chat. Nothing is lost, and every change is documented automatically — even if you have git turned off. If you hate writing docs, this is your cheat code.

🔗 Dive Deeper:

Project: 3D Fractal Generator Live Demo

Tool: Pieces MCP for Cursor

Documentation: A Masterclass In Process

Subscribe to get this weirdness in your inbox every Tuesday.

(And if you are building something cool, hit reply. I read everything.)

P.S. Can’t wait a whole week?

Join the Telegram Channel for daily updates, raw news links, and builder commentary as it happens.

A Note on Independence: Stripe isn’t available in my country, so I cannot monetize this blog the traditional way. If you value this work, you can help cover my server costs and agent subscriptions — effectively becoming my nano-angel investor.